The Metaverse is all the rage right now. The technology has potential applications across many industries and domains. Given the attention it is drawing, it is worth discussing how AI Vision can benefit the Metaverse and vice versa. After all, the two technologies are closely related.

But first, let's talk about what the Metaverse really is.

What is the Metaverse?

The word Metaverse combines two words: meta (meaning transcending) and universe (here, the synthetic environment linked to our physical surroundings). Fittingly, the name was coined by a novelist in a work of speculative fiction back in 1992. The novel, Snow Crash, was written by Neal Stephenson, who described the Metaverse as a 3D virtual world where people exist as avatars and interact with software agents.

As the internet progressed and digital reality took hold, avatars became more common than ever. With games like Second Life, the appeal of a virtual self in a virtual world became more pronounced. Second Life still has a sizable active user base worldwide.

In the era of social media, however, the Metaverse was never going to stay confined to online gaming for long. As virtual reality and internet communities grew, there was clearly demand for a place where people could meet virtually as their avatars.

So, where does AI (Artificial Intelligence) Vision come into the picture?

The Role of AI (Artificial Intelligence) Vision in Metaverse

AI Vision acts as the sight of a machine: it adds context to the visual feed so that the machine has a clearer understanding of what it is seeing. In the Metaverse, it lets people experience the virtual world with greater realism, providing an immersive experience that imitates the real world.

As Virtual Reality, Augmented Reality, and Mixed Reality mature into distinct technologies with more specialized applications, AI Vision can give extended reality (XR) products the tools they need to process, analyze, and understand visuals, whether digital images or videos, and to make decisions and take actions based on them.

This helps XR devices identify and better understand visual information about users' activities and physical surroundings, which opens the door to more precise and accurate virtual or augmented reality environments. Moreover, by applying AI Vision to behavioral insights about users, devices can gain spatial intelligence that improves the interaction between the user and the VR or AR experience.

Applications of AI Vision in Metaverse

Let’s walk through a few of the major applications of AI Vision in building a better Metaverse environment.

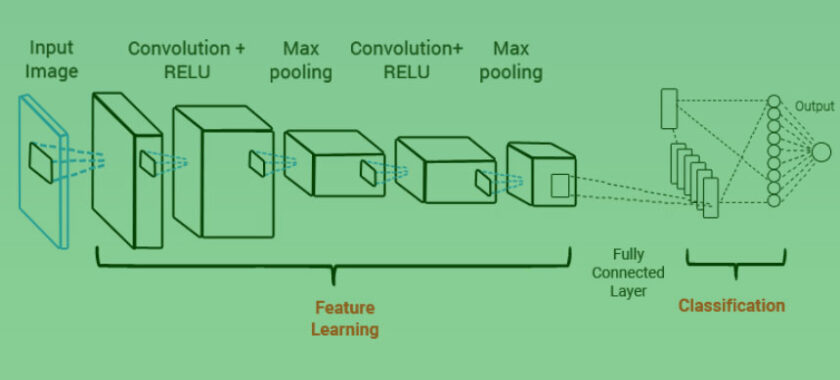

1. Image Classification

Classifying images into the right categories helps machines identify an object in relation to its surroundings rather than as a bunch of pixels. With AI-based self-learning algorithms, the machine can be taught to identify images more accurately, and its accuracy improves as the models are refined and updated.
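To make the idea concrete, here is a minimal sketch of classification in Python. It is a toy nearest-centroid classifier, not a deep learning model: each image is reduced to its average color, and a new image gets the label whose training average is closest. The labels ("grass", "sky"), the helper names, and the tiny solid-color images are all assumptions made up for illustration.

```python
# Toy nearest-centroid classifier: an illustration, not a production model.
# Images are small grids of (r, g, b) pixels; labels are hypothetical.

def mean_color(image):
    """Reduce an image (rows of (r, g, b) tuples) to its average color."""
    pixels = [px for row in image for px in row]
    n = len(pixels)
    return tuple(sum(px[c] for px in pixels) / n for c in range(3))

def train(labeled_images):
    """Compute one centroid (average feature vector) per label."""
    sums, counts = {}, {}
    for image, label in labeled_images:
        feat = mean_color(image)
        acc = sums.setdefault(label, [0.0, 0.0, 0.0])
        for c in range(3):
            acc[c] += feat[c]
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in acc)
        for label, acc in sums.items()
    }

def classify(image, centroids):
    """Return the label whose centroid is nearest to the image's average color."""
    feat = mean_color(image)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: dist2(feat, centroids[label]))

def solid(color, size=2):
    """Build a size x size image filled with one color (test helper)."""
    return [[color] * size for _ in range(size)]

training = [
    (solid((20, 180, 30)), "grass"),
    (solid((40, 200, 50)), "grass"),
    (solid((30, 60, 220)), "sky"),
    (solid((50, 90, 240)), "sky"),
]
centroids = train(training)
print(classify(solid((35, 70, 230)), centroids))  # prints "sky"
```

Real systems replace the average-color feature with features learned by a neural network, but the overall shape (extract features, compare against what was learned, output a category) stays the same.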

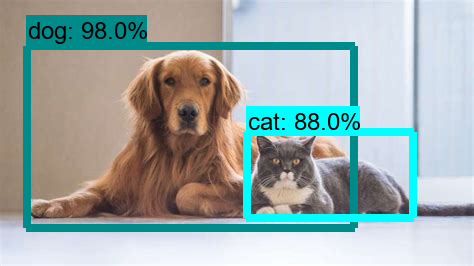

2. Object Detection

Identifying and detecting objects in a video or image can help users move around in virtual environments with ease. Moreover, this information can make the immersion more realistic as it blends reality with the virtual world. A great example of this is the spatial computing used by the Magic Leap One headset, which enables virtual objects to hide behind real objects.
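One common way to let virtual objects hide behind real ones is a per-pixel depth test. The sketch below assumes the device can supply a depth value for every real-world pixel; it is a generic illustration and makes no claim about how any particular headset implements occlusion. The function name `composite` and the one-row sample frame are hypothetical.

```python
# Per-pixel depth test for occlusion: a simplified sketch.
# Assumption: depths are distances from the viewer in meters, and a
# virtual depth of None means "no virtual object at this pixel".

def composite(real_rgb, real_depth, virt_rgb, virt_depth):
    """Show a virtual pixel only where it is closer than the real scene."""
    out = []
    for y, row in enumerate(real_rgb):
        out_row = []
        for x, real_px in enumerate(row):
            v_depth = virt_depth[y][x]
            if v_depth is not None and v_depth < real_depth[y][x]:
                out_row.append(virt_rgb[y][x])  # virtual object is in front
            else:
                out_row.append(real_px)  # real object hides the virtual one
        out.append(out_row)
    return out

# One-row frame: a real chair 1 m away on the left, a wall 3 m away elsewhere.
real_rgb = [["chair", "wall", "wall"]]
real_depth = [[1.0, 3.0, 3.0]]

# A virtual robot placed 2 m away, covering the first two pixels.
virt_rgb = [["robot", "robot", None]]
virt_depth = [[2.0, 2.0, None]]

frame = composite(real_rgb, real_depth, virt_rgb, virt_depth)
print(frame)  # [['chair', 'robot', 'wall']]
```

The robot is hidden where the chair is nearer to the viewer and visible where only the more distant wall is behind it.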

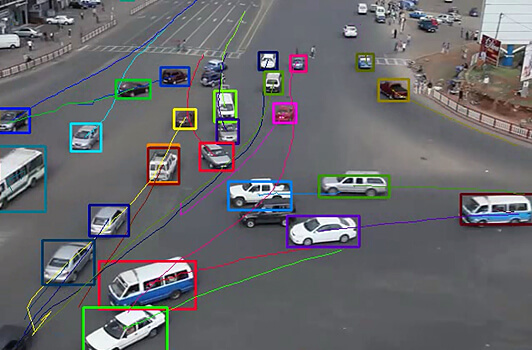

3. Object Tracking

Because the Metaverse is all about moving around in huge environments, it becomes crucial to track objects across many devices. With AI Vision, monitoring and tracking objects, whether real or virtual, becomes easier and more reliable.
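A simple form of tracking links the bounding boxes detected in one frame to those in the next by how much they overlap (intersection over union, or IoU). The sketch below is a minimal greedy matcher under that assumption; it covers a single camera only, and the function names, the 0.3 threshold, and the sample boxes are illustrative choices rather than a reference implementation.

```python
# Greedy IoU-based tracking across frames: a minimal sketch.
# Boxes are (x1, y1, x2, y2); tracks map an integer id to the latest box.

def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def update_tracks(tracks, detections, next_id, threshold=0.3):
    """Match each track to its best-overlapping detection in the new frame.

    Tracks without a good match are dropped; unmatched detections start
    new tracks. Returns the updated tracks and the next unused id.
    """
    new_tracks = {}
    unmatched = list(detections)
    for track_id, box in tracks.items():
        if not unmatched:
            break
        best = max(unmatched, key=lambda det: iou(box, det))
        if iou(box, best) >= threshold:
            new_tracks[track_id] = best  # same object, new position
            unmatched.remove(best)
    for det in unmatched:
        new_tracks[next_id] = det  # a newly seen object
        next_id += 1
    return new_tracks, next_id

frame1 = [(0, 0, 10, 10), (50, 50, 60, 60)]
frame2 = [(52, 50, 62, 60), (1, 0, 11, 10), (100, 100, 110, 110)]

tracks, next_id = update_tracks({}, frame1, next_id=0)
tracks, next_id = update_tracks(tracks, frame2, next_id)
print(tracks)
# {0: (1, 0, 11, 10), 1: (52, 50, 62, 60), 2: (100, 100, 110, 110)}
```

Objects 0 and 1 keep their ids even though the detections arrive in a different order in the second frame, and the newly appearing box gets id 2. Tracking across many devices would additionally require a shared coordinate system, which this sketch leaves out.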

Conclusion

Both AI Vision and the Metaverse are being aggressively researched, and new developments emerge almost every day. As we move toward a more technologically advanced future, it remains to be seen how the interaction between these two technologies turns out and what further possibilities are uncovered.

Vikas Maurya is a professional blogger and Data analyst who writes about a variety of topics related to his niche, including data analysis and digital marketing.